Sagar Prakash Barad

ML Researcher

NISER Graduate Student

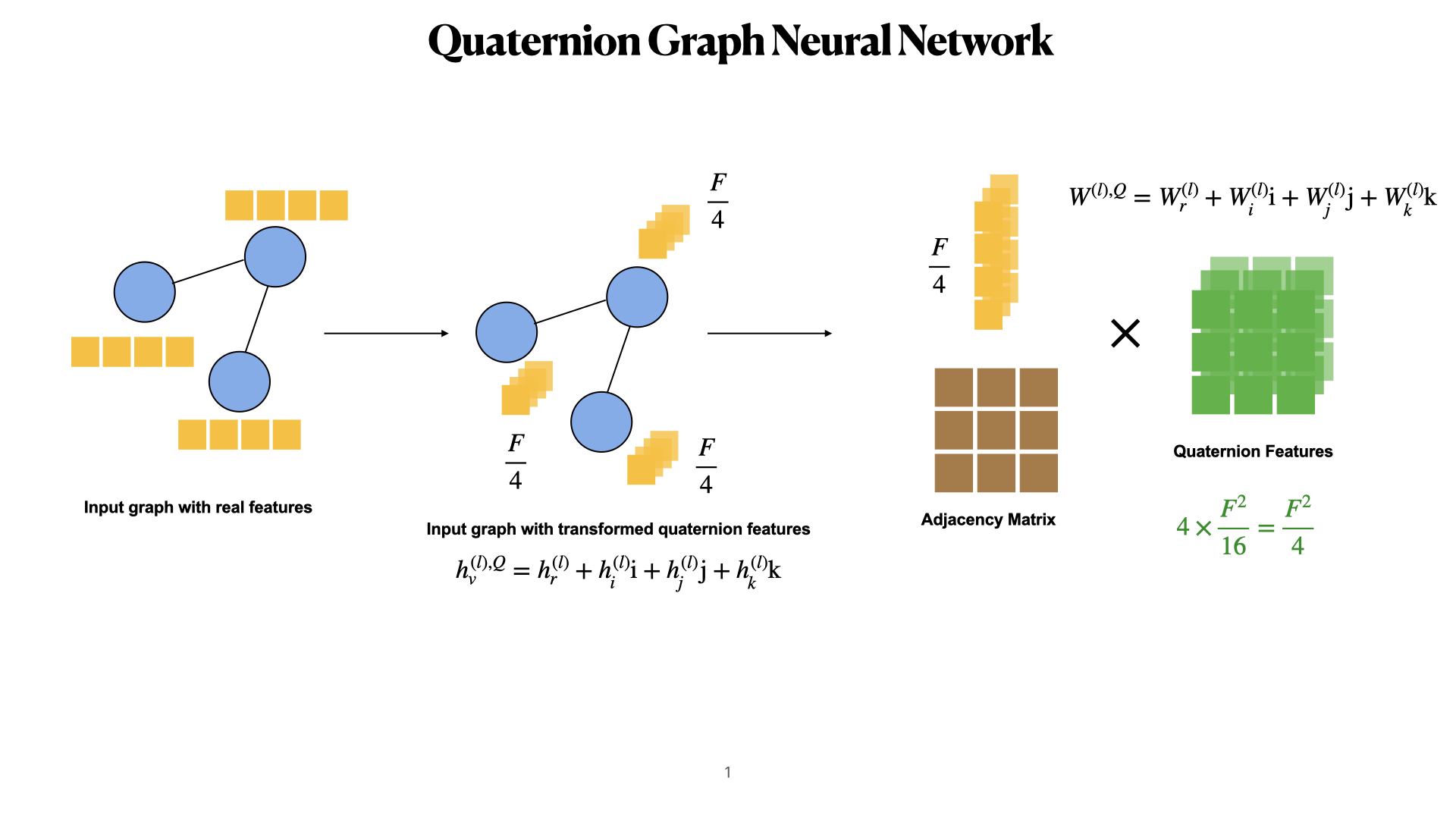

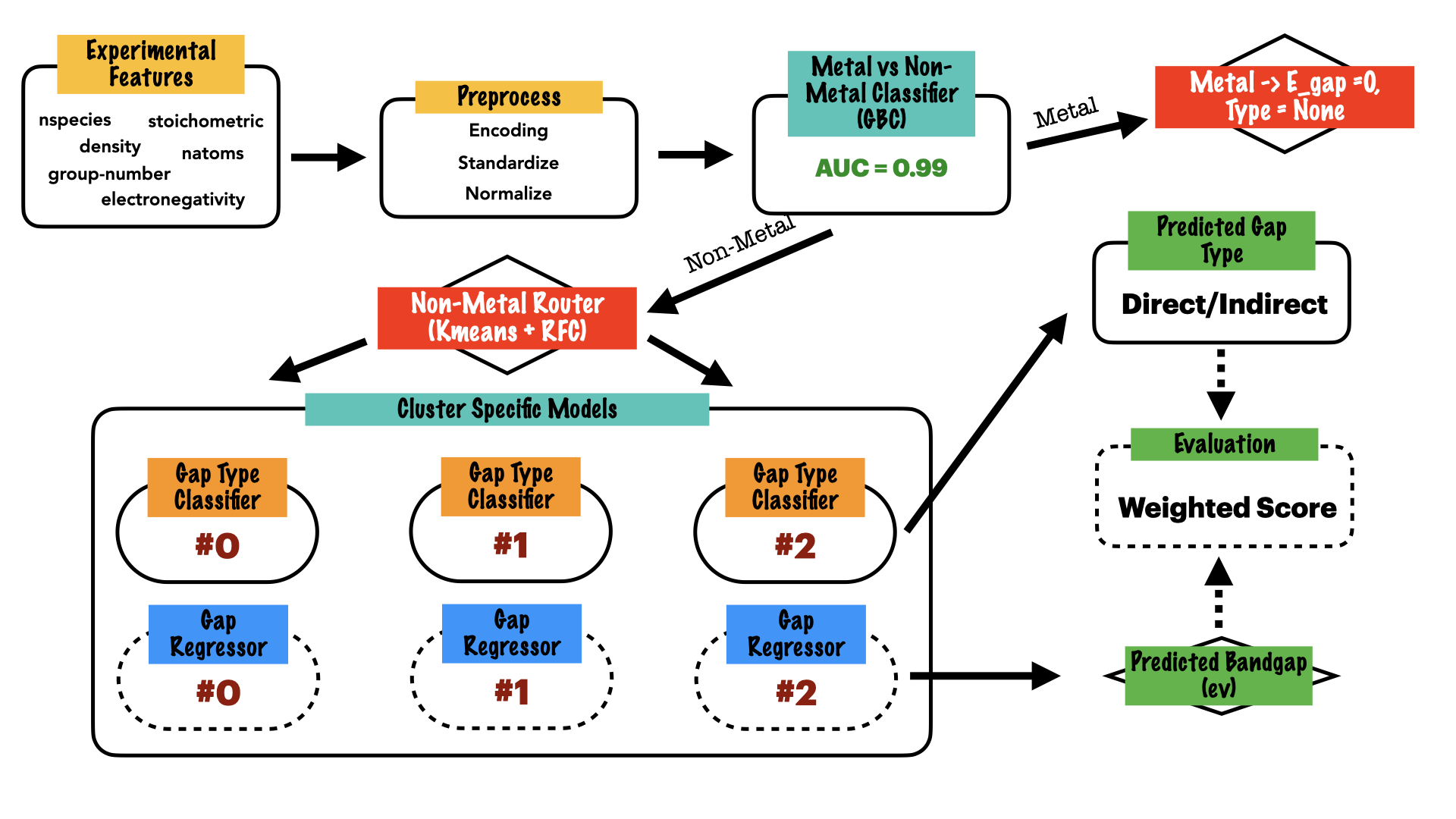

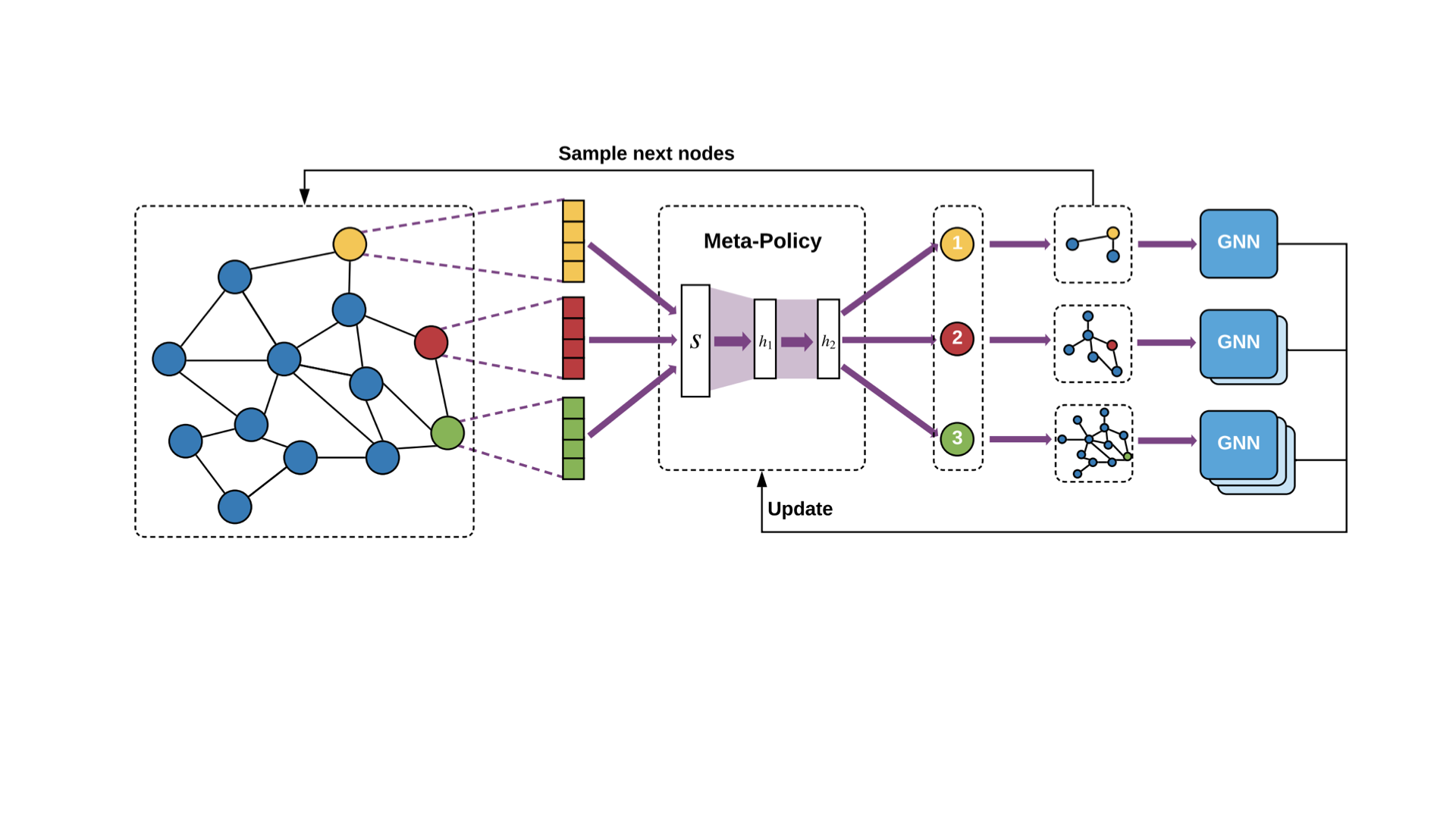

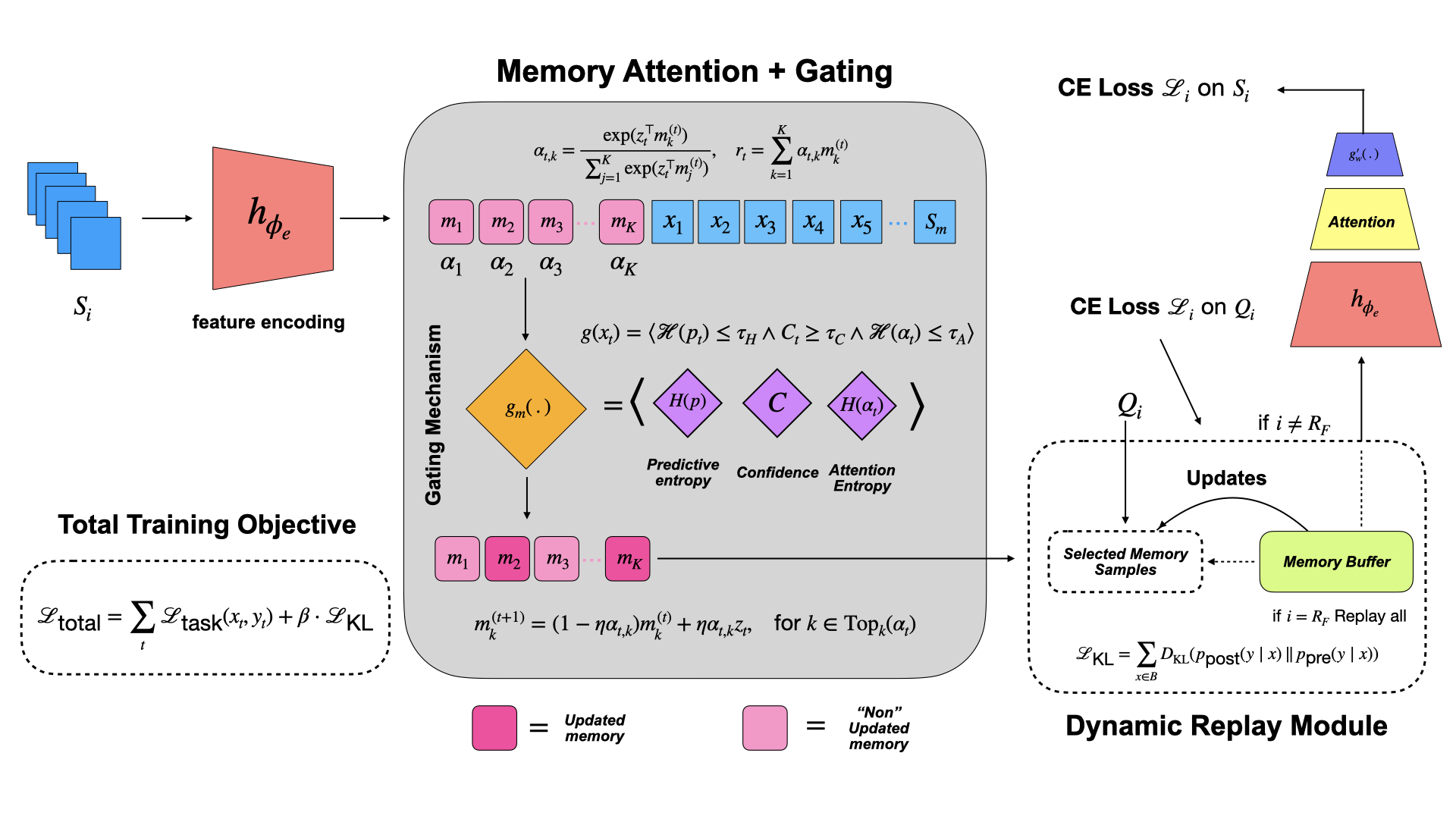

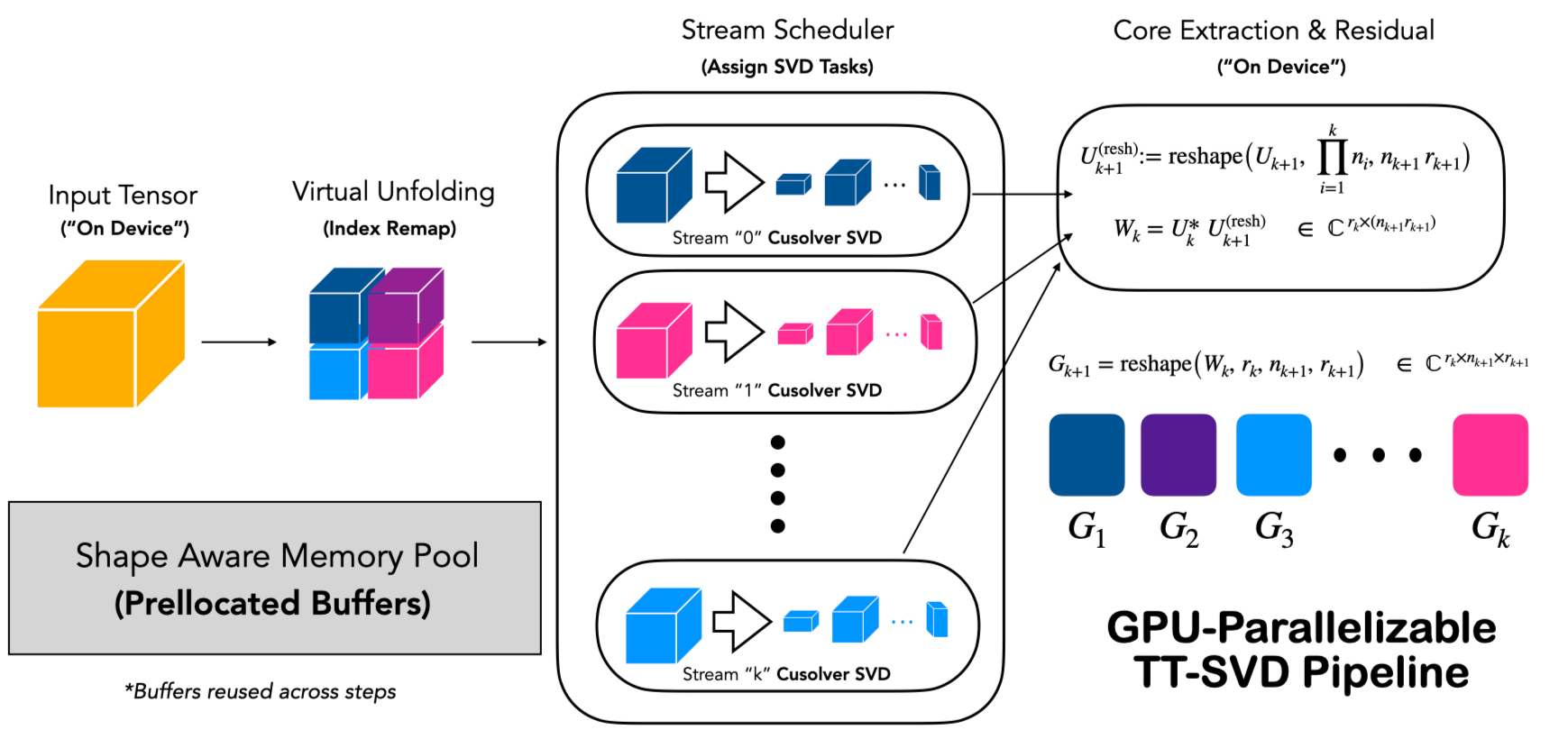

I am a Master’s student in Physical Sciences (minor in Computer Science) at NISER, Bhubaneswar, where I work on continual learning, efficient graph neural networks, and quantum-inspired models. My Master’s thesis develops a memory-augmented Transformer with entropy-gated slot attention and hybrid replay strategies, achieving 93.5% accuracy with only 1.5% forgetting across NLP and vision benchmarks. I have worked on FastPath, which reduces latency in unconstrained pruned models without sacrificing accuracy and is currently under review at WSDM 2026. I have also worked on Quaternion Message Passing Neural Networks (accepted at PAKDD 2025), demonstrating higher expressiveness at reduced parameter cost and verifying the existence of Graph Lottery Tickets. In addition, I contributed to Local and Global State Space Models (LoGo), which combine Mamba’s scalable memory with Transformer architectures. Earlier research includes adiabatic quantum computing with carbon nanotube qubits, Kuramoto synchronization in nonlinear systems, and machine learning for band-gap prediction, the latter published in IEEE Xplore. My broader interests lie in building scalable, theoretically grounded AI systems and advancing high-performance computing through efficient tensor operation methods on GPUs.

Research

IC-CGU (IEEE Xplore) 2024

🎤 Oral Presentation

Estimation of Electronic Band Gap Energy From Material Properties Using Machine Learning

S. P. Barad, S. Kumar, and S. Mishra

Proposed an ML architecture to predict material band gaps and their types from easily computed material properties—removing the need for expensive DFT calculations.

WSDM 2026 (under review)

FastPath: Efficient Channel Reordering for Pruned Neural Networks via Graph Learning

S. P. Barad and S. Mishra

Proposed FastPath, a graph reordering and selective pruning method that cuts redundant node features in GNNs. Applied to VisionGNN, our method yields significant latency reduction on ImageNet and CIFAR-10 while preserving accuracy.

TMLR 2025 (under review)

InfoGate: Information-Theoretic Gating for Continual Learning in Memory-Augmented Transformers

S. P. Barad and S. Mishra

InfoGate uses information-theoretic selective memory updates with entropy- and confidence-based gating plus KL-regularized consolidation to reduce catastrophic forgetting in continual learning benchmarks.

YRS Symposium / CODS-COMAD 2025

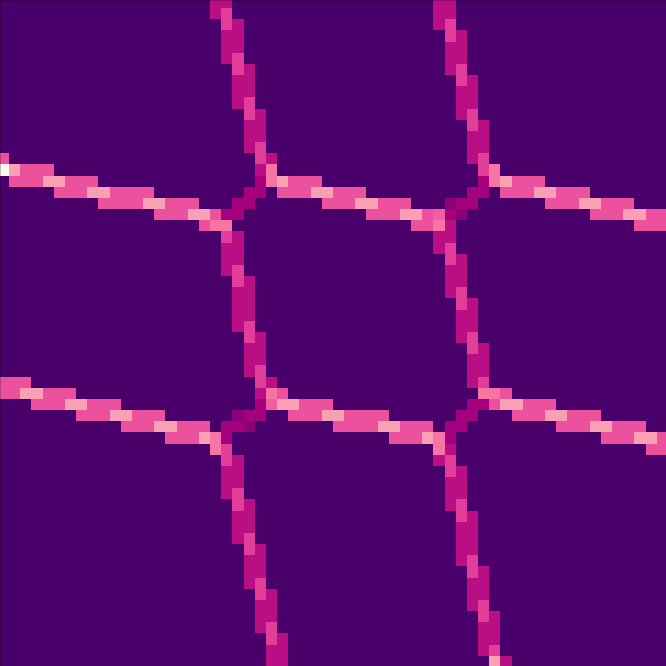

Parallel Tensor-Train Methods for High-Performance GPU Decomposition

Archana Pujari, S. P. Barad*, and S. Mishra

Presented an extended abstract on parallel tensor-train (TT) decomposition and tensor unfolding strategies, with GPU-resident pipelines, randomized sketching, and optimized contraction strategies for large-scale tensor factorization.

Experience

Master’s Thesis Researcher — NISER Bhubaneswar • Bhubaneswar, India

Developing selective, replay-consolidation memory for robust continual learning. Built a memory-augmented Transformer with entropy-gated slot attention and hybrid replay strategies, achieving 93.5% accuracy with 1.5% forgetting on WikiText-103, LQA, and Split-/Permuted-MNIST.

Researcher — NISER Bhubaneswar • Bhubaneswar, India

Proposed the FastPath method to improve the efficiency of GNNs. Applied to VisionGNN, it achieves significant latency reduction on ImageNet and CIFAR-10 while preserving accuracy. Under review at WSDM 2026.

Researcher — NISER Bhubaneswar & Microsoft India (collaboration) • Bhubaneswar, India

Worked on Quaternion Message Passing Neural Networks (QMPNNs), showing greater expressiveness than real-valued models at one quarter of the parameters. Verified Graph Lottery Tickets in QMPNNs. Accepted at PAKDD 2025.

Summer Research Fellow — IIIT Bangalore • Bangalore, India

Explored adiabatic quantum computing with carbon nanotube qubits, demonstrating coherence and stability in simulations. Also modeled synchronization dynamics using Kuramoto oscillators.

Researcher — NISER Bhubaneswar • Bhubaneswar, India

Designed a machine learning framework for band-gap prediction from material properties without DFT dependence. Published in IEEE Xplore.

Portfolio

Topologically Ordered States in Qiskit

Implemented topological ordering and ground state preparation in Qiskit, simulating toric code systems up to 31 qubits for quantum error correction research.

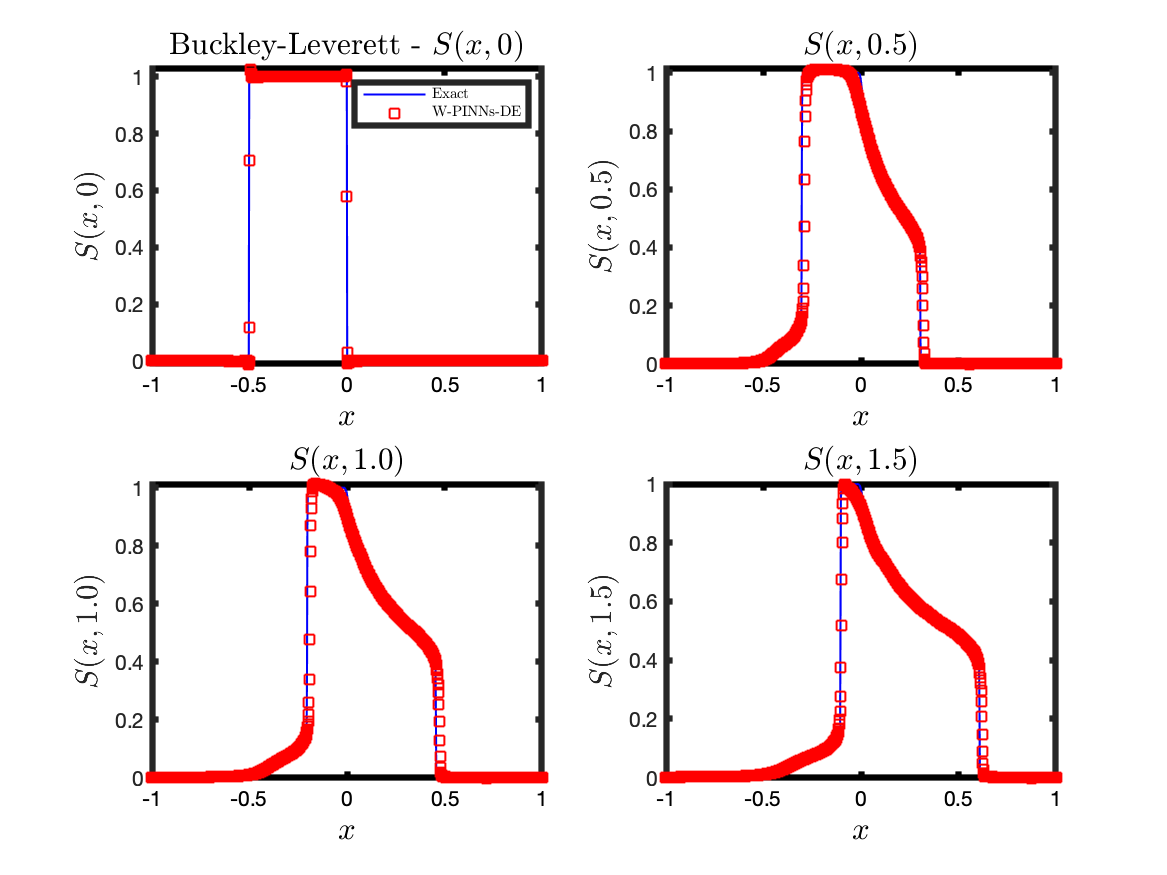

FV-PINNs

Combined a Kurganov–Tadmor flux solver with Physics-Informed Neural Networks to accurately handle shock discontinuities. Validated on shock-tube problems with improved stability and accuracy.

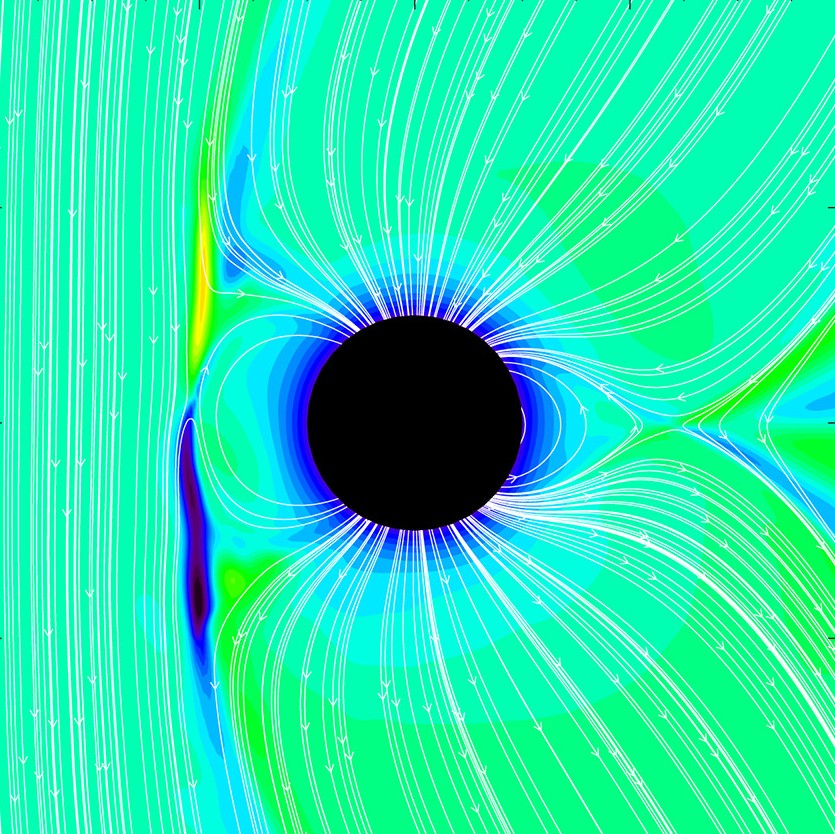

αΩ Galactic Dynamo Simulation

Investigated finite difference scheme sensitivity in galactic dynamo simulations. Explored how numerical stability and accuracy affect large-scale astrophysical magnetic field modeling.

Quantum Dot Dynamics Simulator

Built an interactive simulator for quantum dot properties with real-time parameter adjustments, validated against experimental datasets.